The Pace That Concerns Me

I remember when DeepSeek released its R1 model and how amazed people were by its capabilities. But in the software engineering world I inhabit, I had already experienced similar capabilities with OpenAI's models months earlier. What strikes me now isn't just the power of these models, but the breathtaking pace at which they're evolving.

Just consider this timeline: we went from watching OpenAI cautiously preview its reasoning-focused o1 model last year to what we're experiencing now in early 2025. Back then, OpenAI would announce a model, test it extensively, and gradually open it to a small group of verified researchers before a wider release. The process took months, with safety guardrails carefully constructed along the way.

Fast forward to today, and that careful pace has been abandoned. In just the past few weeks, we've seen Claude 3.7 Sonnet, Grok 3, GPT-4.5, and numerous other models released in rapid succession. What used to take half a year now happens in weeks. The pace of innovation feels exponential.

My Journey with Claude: From 3.5 to 3.7

As a software engineer working on open-source projects, I've had a front-row seat to this acceleration. Claude 3.5 Sonnet was already impressive - it consistently helped me improve code quality and handle edge cases in my open-source projects. I could feed it chunks of code, and it would identify inefficiencies or potential bugs that might have taken me hours to spot.

Then came Claude 3.7 Sonnet. The jump in capability was stunning. Its extended thinking mode lets it reason through complex problems before responding, resulting in significantly more robust code reviews and suggestions. What impressed me most was how it could reason about potential race conditions and edge cases that weren't immediately obvious from the code. It wasn't just looking at syntax; it was reasoning about how the code would behave in real-world scenarios.

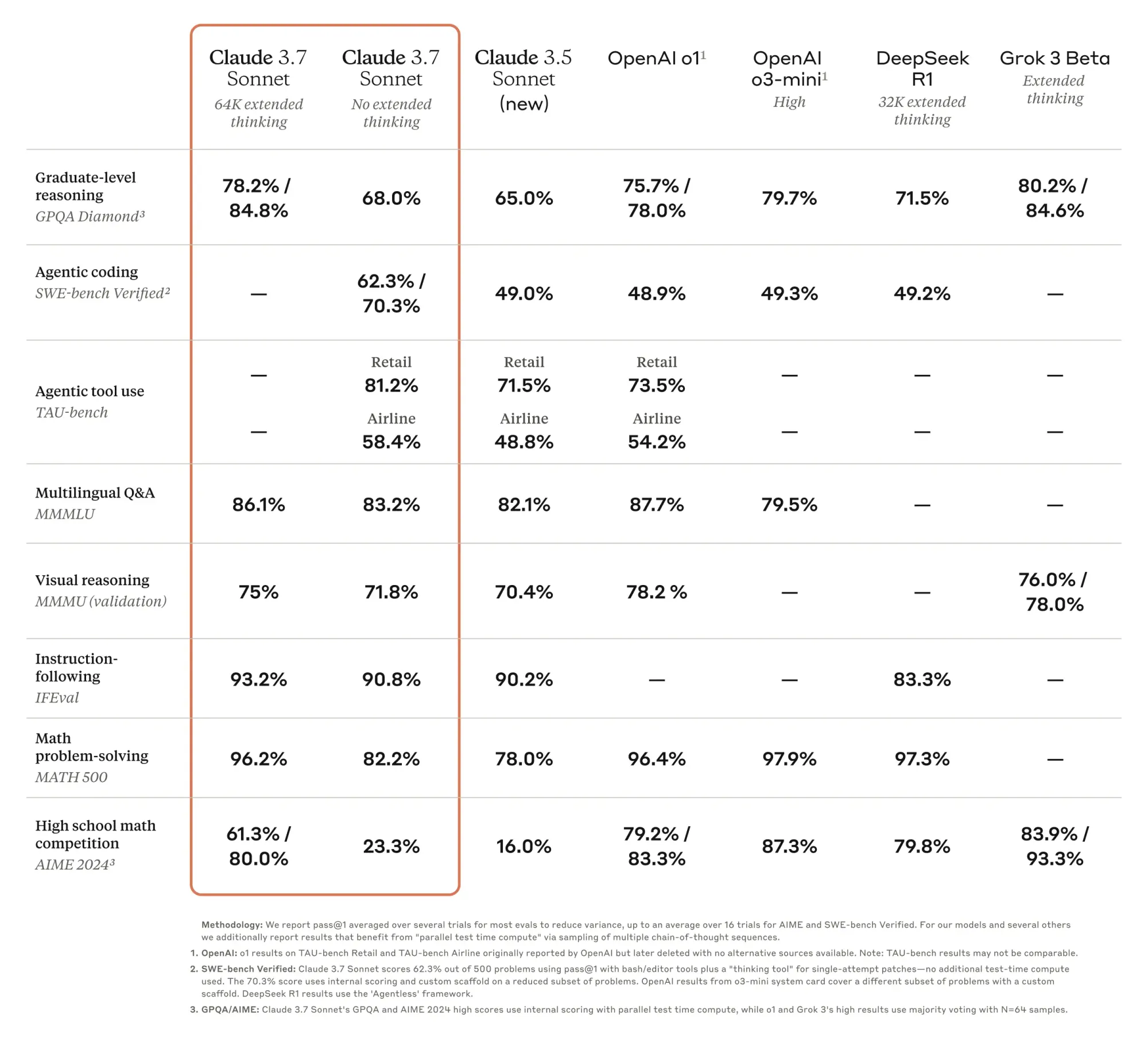

This advancement isn't just subjective experience. Anthropic reported that Claude 3.7 Sonnet scored up to 70.3% on SWE-bench Verified, a benchmark of real-world software engineering tasks, handily outperforming its predecessor. It writes cleaner, more idiomatic code with fewer errors. For my open-source work, it's been transformative - like having a tireless senior engineer reviewing every line I write.

The Global Race: No One on the Brakes

What concerns me isn't the technology itself - it's that no one seems to be pumping the brakes. We're witnessing what feels like a global AI arms race, with companies in both the US and China pushing development at unprecedented speeds.

It reminds me of the Manhattan Project and Oppenheimer's eventual moral reckoning. After witnessing the destructive power of nuclear weapons, Oppenheimer was left haunted by his contribution, famously lamenting:

Mr. President, I feel I have blood on my hands

Despite initially pursuing the technology with conviction and patriotic fervor, he spent the remainder of his life wrestling with the devastating human cost of his scientific achievement.

Today's AI acceleration mirrors this trajectory in concerning ways. The rapid release cadence isn't just about shipping new models; it represents a fundamental shift away from cautious, measured development toward a rush to open-source powerful capabilities, lower training costs, and eliminate barriers to entry. Just as the nuclear scientists couldn't fully anticipate the long-term consequences of their work until it was too late, I wonder if we're collectively underestimating the transformative impact of increasingly powerful AI systems being deployed at unprecedented speed. Will today's AI pioneers someday look back with similar regret, wondering if they should have moved more carefully when they had the chance?

Concrete AI Safety Concerns

While the capabilities of modern AI systems are remarkable, they also introduce specific risks that deserve our attention. These aren't theoretical concerns - we've already witnessed real-world examples of AI systems causing harm when deployed without adequate safeguards.

Misinformation is perhaps the most visible threat. AI can now generate convincing fake content at scale, creating potential for widespread deception. In March 2022, a deepfake video circulated showing Ukraine's President Zelenskyy supposedly urging his troops to surrender. Though this crude fake was quickly debunked because of obvious visual inconsistencies, it signaled the coming wave of more sophisticated AI-driven deception. As these models improve, distinguishing truth from AI-generated falsehood becomes increasingly difficult.

Bias in AI decision-making represents another significant risk. These models learn from historical data, inevitably absorbing and potentially amplifying human biases. Amazon experienced this firsthand when its experimental AI hiring tool was found to be systematically downgrading female candidates. The algorithm had taught itself that male candidates were preferable, based on the predominantly male résumés it was trained on. Despite efforts to fix the bias, Amazon ultimately scrapped the tool, an episode that shows how AI can inadvertently perpetuate discrimination.

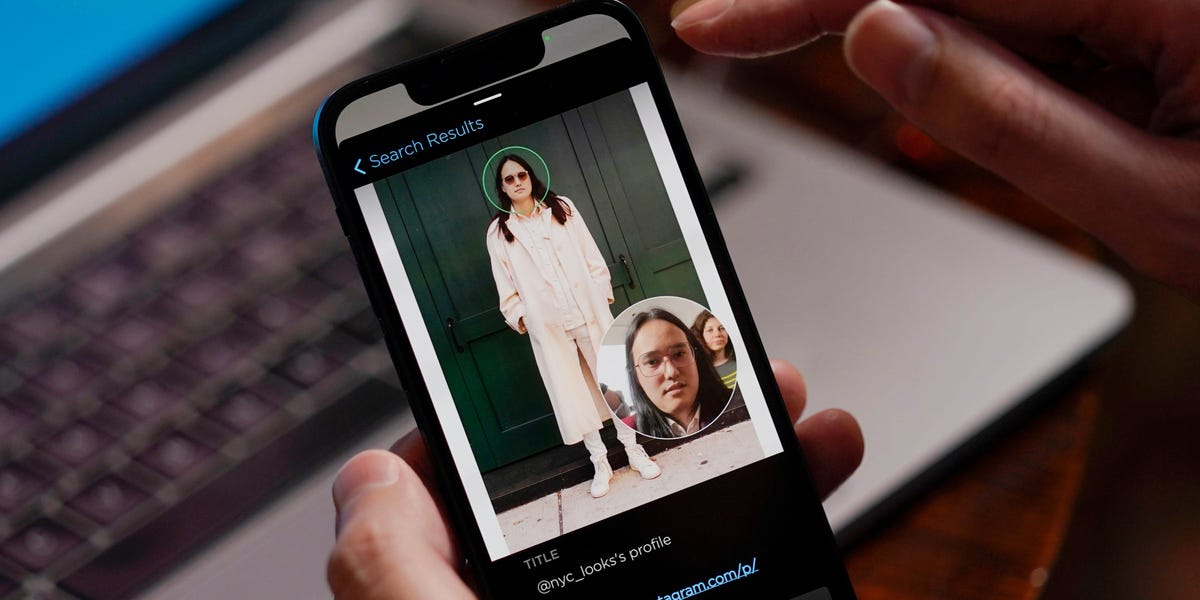

Privacy breaches add another layer of concern. AI systems often require vast amounts of personal data, raising questions about consent and surveillance. Clearview AI exemplifies this problem – they scraped billions of images from social media to build a massive facial recognition database without users' knowledge or permission. This created what critics called a "perpetual police lineup" where anyone's face could be tracked. The resulting backlash led to legal action and fines in multiple countries, highlighting the tension between AI capabilities and privacy rights.

Security vulnerabilities unique to AI systems also deserve attention. Researchers have demonstrated how malicious actors might exploit the way AI perceives the world – placing small stickers on a stop sign can cause computer vision systems to misinterpret it as a speed limit sign. In a self-driving vehicle, such manipulations could have life-threatening consequences. Similarly, attackers might find ways to make AI models reveal sensitive information or behave erratically, creating new attack vectors for bad actors.

The Job Displacement Question

Let me be clear: I'm not anti-technology. As a software engineer, I enjoy these advancements more than most can imagine. But I do wonder about the societal impact when development outpaces adaptation. Last year, I might have told my friends that professions like design had about five years before AI significantly redefined them. Now, looking at the acceleration curve, that timeline seems too optimistic.

The scale of potential disruption is sobering. A 2023 Goldman Sachs analysis estimated that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation, and could automate roughly a quarter of current work tasks in the U.S. and Europe. While many of these jobs won't disappear entirely, they will be radically transformed.

This goes beyond the typical factory automation we've seen for decades. Today's AI is encroaching on office, retail, and service sectors too. Routine-heavy occupations are squarely in AI's sights: administrative support, basic accounting, data processing, customer service, and even middle-management roles. More surprisingly, advancements in generative AI are putting traditionally "safe" professional jobs at risk – paralegals and junior lawyers, journalists, and even computer programmers like myself are feeling the pressure.

The industries most vulnerable to disruption include:

- Manufacturing and Warehousing: Robots and AI-powered machines performing assembly, packaging, and inventory management with minimal human intervention.

- Transportation: Self-driving technology threatening long-haul truck drivers, delivery drivers, and rideshare operators.

- Customer Service: AI chatbots handling inquiries that previously required call center staff, with one bot potentially replacing many human agents.

- Finance and Accounting: Algorithmic trading and AI risk assessment tools replacing financial analysts, while automated bookkeeping software takes over tasks once done by entry-level accountants.

- Healthcare: While doctors and nurses won't be replaced wholesale, AI diagnostics and robots may reduce the need for technicians and assistants in areas like medical image analysis and routine monitoring.

The challenge is that while new jobs will certainly emerge (data scientists, AI trainers, maintenance specialists), these roles often require different skills or are located in different regions. The people displaced by AI might not be immediately qualified for these new positions, creating a potential skills gap and transition period.

When I see news about AI systems being integrated into government services or medical diagnostics, I recognize the efficiency gains. But I also see the potential for rapid displacement without adequate transition time.

Even Elon Musk has suggested that Nobel Prize-winning research will soon be dominated by AI. While this might sound hyperbolic, the trajectory of improvement makes it harder to dismiss.

The Open Source Dilemma

The open-sourcing of advanced AI models presents a genuine dilemma. On one hand, it democratizes access and prevents monopolistic control by a few tech giants. Alibaba's Qwen QwQ-Max-Preview, for instance, is billed by the Qwen team as offering strong reasoning, excelling at mathematics, coding, and general-domain tasks, and performing well in agent-related workflows. Developments like this help level the playing field for developers worldwide.

On the other hand, this trend also circumvents crucial safety checkpoints. While major commercial models maintain content safeguards and restrictions, open-source alternatives create a parallel ecosystem with fewer constraints. The technical knowledge for fine-tuning unfiltered models is increasingly accessible, so anyone with moderate computing resources can potentially adapt a capable model and strip away the ethical guardrails that responsible companies implement.

In early 2023, this risk became concrete when Meta's LLaMA model leaked online. Billed by Meta as competitive with leading models of the time, LLaMA spread rapidly among AI enthusiasts, alarming policymakers who worried that, now freely available, it could be misused for spam, fraud, malware, and harassment. Unlike a company API, where the provider can enforce rules, an open model becomes a tool anyone can wield however they choose.

We've already seen vivid examples of unintended consequences. The notorious GPT-4chan, created by fine-tuning the open GPT-J model on millions of posts from 4chan's extreme /pol/ board, produced a chatbot that convincingly imitated the toxic, conspiratorial style of the forum's users. When deployed on the forum as an experiment, the bots posted racist and hateful messages that were hard to distinguish from those of real users. If one person could do this as a prank, others with worse intent could do the same to flood social media with bigotry or disinformation.

Image generation has seen similarly troubling misuses. Open models like Stable Diffusion have been modified to create pornographic and deepfake images, including non-consensual explicit images of real people. On Telegram, developers deployed bots built on open-source AI that let users upload clothed photos of women and receive fake nudes in return. One investigation found at least 50 such bots with over 4 million users combined, collectively generating enormous numbers of non-consensual pornographic images. Many victims were unaware their pictures were being manipulated this way, resulting in devastating violations of privacy and dignity.

It's like having robust security protocols at major banks while simultaneously publishing detailed instructions for opening vaults. I appreciate the democratizing benefits of open access, but I worry we haven't adequately prepared for a world where powerful AI capabilities become universally accessible before we've solved the governance challenges they present. The question isn't whether harmful applications will emerge—it's how we'll respond when they inevitably do.

Balancing Risks and Innovation

With AI's potential to do both great good and harm, we face a critical question: how do we govern AI responsibly without stifling innovation? This balancing act is exceptionally challenging. If regulation is too lax, society could face serious unintended consequences from untested technologies. But if regulation is too heavy-handed, it might smother beneficial innovations or drive research underground.

One major difficulty is that AI doesn't fit neatly into existing regulatory frameworks. Unlike the automotive industry with its century of safety standards development, modern AI has exploded in just a decade. Consider autonomous vehicles: a human driver must be licensed and obey traffic laws, but what about a self-driving car's AI? Who is held accountable if it makes a wrong decision? This isn't hypothetical – in 2018, a self-driving Uber test vehicle struck and killed a pedestrian in Arizona when its system failed to properly identify the woman crossing the street. The incident led to Uber halting its autonomous vehicle tests and underscored that rushing new technology to market without adequate safety measures can cost lives.

We've also seen how social media algorithms, optimized for engagement, ended up amplifying toxic content and misinformation due to lack of early oversight. Facebook's news feed algorithm was linked to the spread of hate speech in Myanmar, where it "supercharged" ethnic hatred against the Rohingya minority, contributing to a wave of violence and a human rights crisis. Incidents like this reveal that AI-driven systems can have societal-scale impacts on democracies, public health, and security.

Yet, imposing restrictions on AI development raises concerns about hampering innovation and competitiveness. Tech leaders often warn that if regulations are too onerous in one country, AI research will simply shift to another with looser rules. Many advocate for a middle ground: "soft law" approaches like ethical guidelines, industry self-regulation, and transparency measures that allow flexibility as the technology evolves.

Crucially, balancing risk and innovation means engaging AI creators in the process. If researchers and companies are part of shaping sensible standards, they're more likely to adhere to them and find creative solutions that satisfy both safety and progress. The AI research community has begun embedding ethics teams and "red teams" that actively try to break or misuse new AI models to spot flaws before release. This kind of internal checkpoint is innovation-friendly while improving safety.

Finding Balance in the Acceleration

Despite my concerns, I remain cautiously optimistic about working alongside these increasingly capable AI systems. Claude 3.7 hasn't replaced me - it's enhanced my capabilities. My open-source projects have never been in better shape, with cleaner code and fewer bugs thanks to AI assistance.

But I also believe we need thoughtful conversation about governance. The pace of development shouldn't be driven solely by market competition or the desire to be first. We need to ask difficult questions about safety, ethics, and the kind of future we want to build.

Some key considerations for responsible AI governance include:

- Robust testing and certification for AI systems used in high-stakes domains, similar to FDA approval for drugs or FAA certification for aircraft.

- Transparency requirements ensuring AI decisions can be explained and audited for fairness, especially in areas like credit scoring or legal applications.

- Liability frameworks clarifying who is responsible when an AI system causes harm – the developer, the user, or some combination.

- International cooperation on AI norms to prevent a regulatory race-to-the-bottom, potentially including guidelines for AI safety research and deployment.

This isn't about halting progress. It's about ensuring that progress serves humanity's best interests. As someone deeply embedded in the tech community, I want to see innovation continue - but with wisdom and foresight guiding our path forward.

Whatever comes next - whether it's Claude 4.0, GPT-5, or something we haven't yet imagined - I hope we approach it with both enthusiasm for the possibilities and respect for the responsibilities these powerful tools entail.

The AI revolution isn't coming. It's here. And how we navigate it now will shape what it becomes.
